
So You Want to Be a Safety-Critical Software Developer?


Over the past decade, aviation has seen rapid, sustained growth in all-electric systems, particularly electric vertical takeoff and landing (eVTOL) aircraft, and this has attracted plenty of attention. As the field has grown, it has become clear that building the aircraft is the real challenge, and it is the domain in which urban air mobility (UAM) companies strive to excel. However, this growth also creates significant challenges for the safety-critical software that keeps these systems safe and reliable, and such software demands rigorous, systematic development processes.

Nothing is safer than a Stormtrooper guarding your code [1]

Particular importance is given to the hardware and software certification process for avionics systems.

The eVTOL Unmanned Aerial System (UAS) class of aircraft has complicated and specific characteristics that require a defined and rigorous certification process.

When it comes to software, any respectable company knows that, under certain conditions, any module can fall into the safety-critical class. In practice, this means that a developer who wants to become an expert at producing clean code must be ready to meet safety-critical avionics criteria.

With this series of blog posts, we will venture into the basics of software for airborne operations, covering topics such as Modified Condition/Decision Coverage (MC/DC) code analysis, typical safety-critical pitfalls, the software development life cycle, the software verification process, and Design Assurance Levels (DALs).

Now that we're lined up on the runway… Cabin crew, prepare for takeoff!

Are you ready to take off? [2]

For these reasons, I decided to dedicate this and future posts to unveiling some good-to-know “recipes” for developers who may be interested (or encouraged) to go down this road in the future.

Let's start at the beginning, with the principal questions:

What is safety-critical code?

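```c
#include <stdbool.h>
#include <stdio.h>

/* Placeholder stubs so the snippet compiles. */
static const bool IS_FLYING = true;
static void Land(const char *how) { printf("landing %s\n", how); }

int main(void) {
    if (IS_FLYING) {
        printf("yep!\n");
    } else {
        Land("gently");
    }
    return 0;
}
```

Safety-critical code is software that, if it fails, could result in loss of life, significant damage, or catastrophic events.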

Aircraft, cars, weapons systems, and medical devices (even if governed by different rules) are just a few examples of safety-critical software systems.

Manned eVTOL aircraft are no exception: like the systems above, they exhibit characteristics that affect their safety-critical level. For this reason, developers must comply with specific rules and certifications.

The European Union Aviation Safety Agency (EASA) and the U.S. Federal Aviation Administration (FAA) recognize a set of standards that serve as strategic guidelines for every developer, architect, and software engineer. If you already know the avionics and airborne software world, you're probably thinking of DO-178C.

What is DO-178C?

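DO-178C is the primary document that certification authorities reference to ensure correctness, control, and confidence in software. Yet once you've studied and understood DO-178C, you will realize that it is only the tip of an iceberg in which RTCA "DO" documents and their EUROCAE "ED" counterparts keep cropping up. DO-178C itself is published in Europe as ED-12C, for example, and it is accompanied by documents such as DO-330 on software tool qualification.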

Unfortunately, working through every document will not solve your problem on its own, because building a safety-critical avionics system requires a broader view of what it means to be safety-critical.

What does that mean?

For example, the compilers, libraries, parsers, real-time simulators, test rigs, BITE (Built-In Test Equipment), recorder systems, traceability tools, validation tools, and other tools used to build and manage the ecosystems we traditionally think of as safety-critical are themselves safety-critical.

Similarly, software that provides statistical information used to make decisions about, or fine-tune, safety-critical systems is also safety-critical. For example, an app that counts how many charge/discharge cycles each cell in an eVTOL battery pack has performed is safety-critical: an incorrect result from that app could lead to a life-threatening situation.
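To make the battery example a little more concrete, here is a minimal sketch of a per-cell cycle counter written defensively: it rejects invalid input, never wraps around silently, and leaves its state unchanged on error. The names (`cell_counter_t`, `cc_init`, `cc_add_discharge`) are hypothetical, and the sketch assumes the common "equivalent full cycle" convention, where one cycle is counted each time the cumulative discharged charge reaches the cell's rated capacity.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical status codes: every call reports success or a specific failure. */
typedef enum { CC_OK = 0, CC_ERR_INVALID, CC_ERR_OVERFLOW } cc_status_t;

/* Hypothetical per-cell counter using the equivalent-full-cycle convention. */
typedef struct {
    uint32_t capacity_mah;   /* rated capacity; must be non-zero */
    uint32_t residual_mah;   /* discharge not yet counted; always < capacity_mah */
    uint32_t full_cycles;    /* equivalent full cycles completed */
} cell_counter_t;

cc_status_t cc_init(cell_counter_t *c, uint32_t capacity_mah)
{
    if (c == NULL || capacity_mah == 0u) {
        return CC_ERR_INVALID;
    }
    c->capacity_mah = capacity_mah;
    c->residual_mah = 0u;
    c->full_cycles = 0u;
    return CC_OK;
}

/* Adds a measured discharge; on any error the counter is left unchanged. */
cc_status_t cc_add_discharge(cell_counter_t *c, uint32_t delta_mah)
{
    if (c == NULL || c->capacity_mah == 0u || c->residual_mah >= c->capacity_mah) {
        return CC_ERR_INVALID;   /* uninitialised or corrupted state */
    }
    /* The 64-bit sum of two 32-bit values cannot overflow. */
    const uint64_t total = (uint64_t)c->residual_mah + delta_mah;
    const uint64_t new_cycles = total / c->capacity_mah;
    if (new_cycles > (uint64_t)(UINT32_MAX - c->full_cycles)) {
        return CC_ERR_OVERFLOW;  /* refuse to wrap around silently */
    }
    c->full_cycles += (uint32_t)new_cycles;
    c->residual_mah = (uint32_t)(total % c->capacity_mah);
    return CC_OK;
}
```

Returning a status instead of clamping makes a fault visible to the caller, which can then raise a warning rather than report a plausible but wrong cycle count.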

And that's not all. When non-critical software runs on the same system as safety-critical software, it may itself be classified as safety-critical if it could adversely affect the system's safety functions. It could do so by contending for I/O, interfering with interrupt handling, monopolizing CPU/GPU capacity, using up RAM, overwriting memory, disrupting threading, or even simply sharing an adjacent memory partition without enough buffer between them.
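One inexpensive, partial defense against memory corruption from a neighbouring partition is to surround a region with guard words ("canaries") and check them periodically, so that an overrun is at least detected. This is a minimal sketch with hypothetical names (`guarded_region_t`, `gr_init`, `gr_intact`) and an arbitrary guard pattern; it only detects corruption after the fact and is not a substitute for hardware-enforced partitioning with an MMU or MPU.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define GUARD_PATTERN 0xA5A5A5A5u  /* arbitrary, easy to spot in a memory dump */
#define GUARD_WORDS   4u
#define REGION_BYTES  64u

/* A data region sandwiched between two guard bands. */
typedef struct {
    uint32_t guard_lo[GUARD_WORDS];
    uint8_t  data[REGION_BYTES];
    uint32_t guard_hi[GUARD_WORDS];
} guarded_region_t;

void gr_init(guarded_region_t *r)
{
    for (uint32_t i = 0u; i < GUARD_WORDS; ++i) {
        r->guard_lo[i] = GUARD_PATTERN;
        r->guard_hi[i] = GUARD_PATTERN;
    }
    memset(r->data, 0, sizeof r->data);
}

/* Returns false if any guard word was overwritten, e.g. by a neighbour's overrun. */
bool gr_intact(const guarded_region_t *r)
{
    for (uint32_t i = 0u; i < GUARD_WORDS; ++i) {
        if (r->guard_lo[i] != GUARD_PATTERN || r->guard_hi[i] != GUARD_PATTERN) {
            return false;
        }
    }
    return true;
}
```

When a check fails, the caller should treat it as a fault and enter its fail-safe path rather than keep using the region.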

I know the statement above can cut developers (and product owners) to the core, but the solution is simpler than you might think.

Here it is: make your software safer! But how?

Don't be upset: safer software prevents overnight debugging sessions! [3]

So it's up to developers to focus on this code and define a feasible fail-safe strategy, including watchdogs, for the system in use. When something goes wrong, that means raising a specific warning, suspending all operations, actuating safety countermeasures, and reporting errors.
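The fail-safe sequence described above can be sketched as a small software watchdog. This is a minimal illustration, not a certified design: the names (`watchdog_t`, `wd_kick`, `wd_tick`, `WD_ERR_TIMEOUT`) are hypothetical, it assumes `wd_tick` is called from a periodic timer, and it only records which fail-safe step has been reached, where a real system would drive outputs and usually also rely on an independent hardware watchdog. After a missed deadline it advances one step per tick and then latches, so a late kick cannot silently clear a fault.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define WD_ERR_TIMEOUT 0x0001u   /* hypothetical error code */

/* Fail-safe steps, in the order described in the text. */
typedef enum {
    FS_NOMINAL = 0,
    FS_WARNING,         /* specific warning raised */
    FS_SUSPENDED,       /* operations suspended */
    FS_COUNTERMEASURE,  /* safety countermeasure actuated */
    FS_REPORTED         /* error reported; latched until re-initialised */
} fs_state_t;

typedef struct {
    uint32_t   timeout_ticks;     /* max ticks allowed between kicks */
    uint32_t   ticks_since_kick;
    fs_state_t state;
    uint32_t   error_code;        /* 0 = no error reported */
} watchdog_t;

bool wd_init(watchdog_t *wd, uint32_t timeout_ticks)
{
    if (wd == NULL || timeout_ticks == 0u) {
        return false;
    }
    wd->timeout_ticks = timeout_ticks;
    wd->ticks_since_kick = 0u;
    wd->state = FS_NOMINAL;
    wd->error_code = 0u;
    return true;
}

/* Called by the monitored task to prove it is still alive. */
void wd_kick(watchdog_t *wd)
{
    if (wd->state == FS_NOMINAL) {   /* once tripped, a late kick changes nothing */
        wd->ticks_since_kick = 0u;
    }
}

/* Called from a periodic timer; returns the current fail-safe step. */
fs_state_t wd_tick(watchdog_t *wd)
{
    switch (wd->state) {
    case FS_NOMINAL:
        wd->ticks_since_kick++;
        if (wd->ticks_since_kick >= wd->timeout_ticks) {
            wd->state = FS_WARNING;
        }
        break;
    case FS_WARNING:
        wd->state = FS_SUSPENDED;
        break;
    case FS_SUSPENDED:
        wd->state = FS_COUNTERMEASURE;
        break;
    case FS_COUNTERMEASURE:
        wd->error_code = WD_ERR_TIMEOUT;
        wd->state = FS_REPORTED;
        break;
    case FS_REPORTED:
    default:
        wd->state = FS_REPORTED;   /* latched; an unknown state is also treated as failed */
        break;
    }
    return wd->state;
}
```

Latching is a deliberate choice: recovering from a fault should be an explicit decision (here, calling `wd_init` again), not a side effect of the monitored task waking up late.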

This may sound simple, but it comes with plenty of tricky aspects: figuring out how to fail safely, how to analyze the weaknesses in your processes, and how to validate your code once it has been corrected.

The next question comes naturally, although the answer may be hard to apply in your daily job as a developer.

Where is the magic wand?

Say "Expelliarmus" to danger! [4]

The closest thing to a spell I've ever found is the right balance between knowing every line of your code, reading and writing a requirements list, ensuring correctness by construction, and being able to design simulation models.
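As a small taste of correctness by construction, a requirement can be pushed into the code so that violations are caught at compile time or rejected at a single, reviewable entry point. The requirement ID (`HLR-042`), the rotor-speed limit, and the names below are hypothetical, chosen only for illustration; the sketch assumes a C11 compiler for `_Static_assert`.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical requirement HLR-042: a commanded rotor speed shall not exceed 3000 rpm. */
#define ROTOR_RPM_MAX 3000u

/* Compile-time check that every legal value fits in the storage type (C11). */
_Static_assert(ROTOR_RPM_MAX <= UINT16_MAX, "HLR-042: limit must fit in uint16_t");

typedef struct {
    uint16_t rpm;   /* <= ROTOR_RPM_MAX whenever built via rotor_cmd_make */
} rotor_cmd_t;

/* Single entry point for building commands (by project convention); traces to HLR-042. */
bool rotor_cmd_make(uint32_t requested_rpm, rotor_cmd_t *out)
{
    if (out == NULL || requested_rpm > ROTOR_RPM_MAX) {
        return false;   /* caller must handle the rejection explicitly */
    }
    out->rpm = (uint16_t)requested_rpm;
    return true;
}
```

In C, nothing prevents other code from writing `rpm` directly, so the convention still has to be enforced by review or static analysis.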

What does it mean to design safer code as a developer?

Why choose just one? [5]

“The more you spend to protect your code from defects, the closer you will be to deploying good safety-critical code.”

Let's put that into perspective: say your washing machine were built to an avionics standard. Your family would never have experienced a dangerous failure, nor would you expect to encounter one in your lifetime. In return, you would probably have bought it from a limited series for a mere €1,000,000.

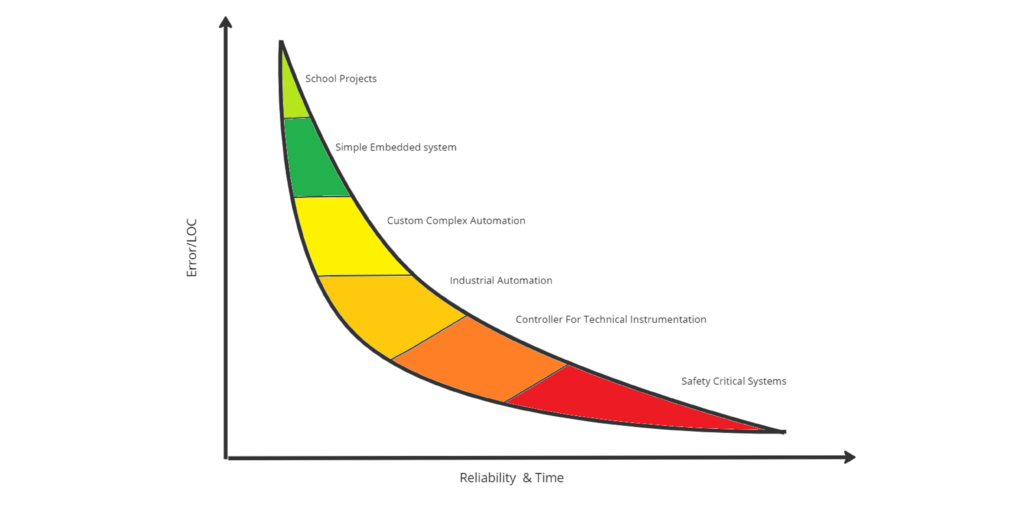

Hope you all like the red zone [6]

The graph above shows that making your code safety-critical drastically reduces its error rate and gives it good reliability, at the cost of much more time spent reviewing it.

So, what's next?

Roll the dice and venture deeper into the dungeons! [7]